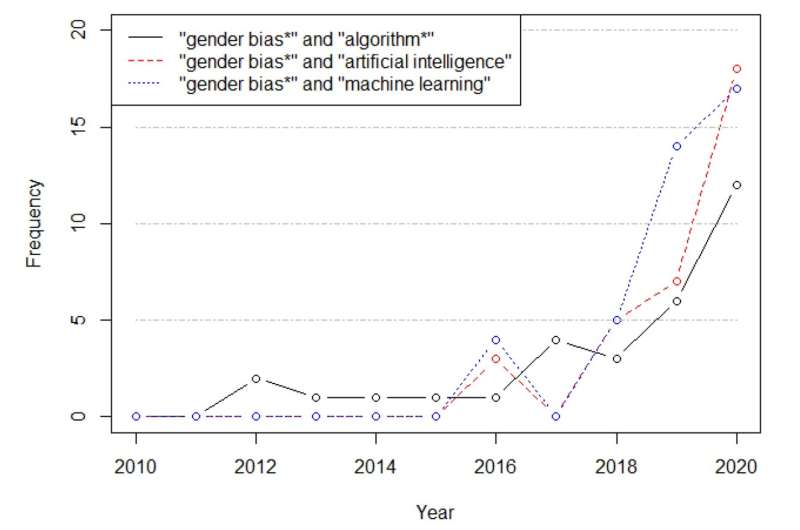

Scopus-indexed articles for different gender-related terms. Credit: Algorithms (2022). DOI: 10.3390/a15090303

Endless screeds have been written on whether the internet algorithms we constantly interact with suffer from gender bias, and all you need to do is carry out a simple search to see this for yourself.

However, according to the researchers behind a new study that seeks to reach a conclusion on this matter, "until now, the debate has not included any scientific analysis." This new article, by an interdisciplinary team, puts forward a new way of tackling the question and suggests some solutions for preventing these deviations in the data and the discrimination they entail.

Algorithms are increasingly used to decide whether to grant a loan or to accept applications. As the range of uses for artificial intelligence (AI) grows, along with its capabilities and importance, it becomes ever more critical to assess any potential prejudices associated with these operations.

"Although it's not a new concept, there are many cases in which this problem has not been examined, thus ignoring the potential consequences," stated the researchers, whose study, published open-access in the journal Algorithms, focused mainly on gender bias in the different fields of AI.

Such prejudices can have a huge impact on society: "Biases affect everything that is discriminated against, excluded or associated with a stereotype. For example, a gender or a race may be excluded in a decision-making process or, simply, certain behaviour may be assumed because of one's gender or the colour of one's skin," explained the lead researcher on the study, Juliana Castañeda Jiménez, an industrial doctorate student at the Universitat Oberta de Catalunya (UOC) under the supervision of Ángel A. Juan, of the Universitat Politècnica de València, and Javier Panadero, of the Universitat Politècnica de Catalunya.

According to Castañeda, "it is possible for algorithmic processes to discriminate on the grounds of gender, even when programmed to be 'blind' to this variable."

The research team also includes Milagros Sáinz and Sergi Yanes, both of the Gender and ICT (GenTIC) research group of the Internet Interdisciplinary Institute (IN3); Laura Calvet, of the Salesian University School of Sarrià; Assumpta Jover, of the Universitat de València; and Ángel A. Juan. The team illustrates this with a number of examples: the case of a well-known recruitment tool that preferred male over female applicants, or that of some credit services that offered less favourable terms to women than to men.

"If old, unbalanced data are used, you're likely to see negative conditioning with regard to black, gay and even female demographics, depending on when and where the data are from," explained Castañeda.
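Castañeda's point that a system can discriminate even when it is "blind" to gender can be seen in a toy example. The Python sketch below is purely hypothetical: the applicant records, the `years_in_field` feature and the threshold of 5 are invented for illustration and do not come from the study. The decision rule never reads the gender field, but in this made-up sample the feature it does use is correlated with gender, so the two groups end up with very different approval rates.

```python
# Hypothetical illustration only: invented data, not taken from the UOC study.
# Each applicant is (gender, years_in_field). The rule below never reads gender.
applicants = [
    ("man", 9), ("man", 7), ("man", 6), ("man", 3),
    ("woman", 6), ("woman", 4), ("woman", 3), ("woman", 2),
]


def gender_blind_rule(years_in_field: int, threshold: int = 5) -> bool:
    """Approve anyone at or above a hypothetical experience threshold."""
    return years_in_field >= threshold


def approval_rate(group: str) -> float:
    """Share of applicants in `group` approved by the gender-blind rule."""
    decisions = [gender_blind_rule(years) for g, years in applicants if g == group]
    return sum(decisions) / len(decisions)


rate_men = approval_rate("man")
rate_women = approval_rate("woman")
print(f"men: {rate_men:.2f}, women: {rate_women:.2f}")  # men: 0.75, women: 0.25
```

Removing the gender column is not enough on its own: any input that tracks gender in the data (here, an invented experience gap) can act as a stand-in for it.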

The sciences are for boys and the arts are for girls

To understand how these patterns affect the different algorithms we deal with, the researchers analyzed previous works that identified gender biases in data processes in four kinds of AI: those describing applications in natural language processing and generation, decision management, speech recognition and facial recognition.

In general, they found that all the algorithms identified and classified white men better. They also found that the algorithms reproduced false beliefs about the physical attributes that should define someone depending on their biological sex, cultural or ethnic background or sexual orientation, and that they made stereotypical associations linking men with the sciences and women with the arts.

Many of the procedures used in image and voice recognition are also based on these stereotypes: cameras find it easier to recognize white faces, and audio analysis has problems with higher-pitched voices, mainly affecting women.

The cases most likely to suffer from these issues are those whose algorithms are built on analyzing real-life data associated with a specific social context. "Some of the main causes are the under-representation of women in the design and development of AI products and services, and the use of datasets with gender biases," noted the researcher, who argued that the problem stems from the cultural environment in which these systems are developed.

"An algorithm, when trained with biased data, can detect hidden patterns in society and, when operating, reproduce them. So if, in society, men and women have unequal representation, the design and development of AI products and services will show gender biases."
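The mechanism Castañeda describes shows up even in a deliberately naive "model". In the hypothetical Python sketch below, the hiring records, the counting model and the 50% cutoff are all invented for illustration and are not the study's method. The model simply learns hiring rates from past decisions. Because the invented history treated equally qualified women worse, the model goes on to recommend the qualified man and reject the qualified woman.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (gender, qualified, hired).
# In this invented history, qualified women were hired less often than qualified men.
history = [
    ("man", True, True), ("man", True, True), ("man", True, True), ("man", False, False),
    ("woman", True, True), ("woman", True, False), ("woman", True, False), ("woman", False, False),
]


def train(records):
    """Learn the historical hiring rate for each (gender, qualified) pair by counting."""
    counts = defaultdict(lambda: [0, 0])  # key -> [number hired, total seen]
    for gender, qualified, hired in records:
        counts[(gender, qualified)][0] += int(hired)
        counts[(gender, qualified)][1] += 1
    return {key: n_hired / total for key, (n_hired, total) in counts.items()}


def predict(model, gender, qualified):
    """Recommend hiring when the learned historical rate exceeds a hypothetical 50% cutoff."""
    return model.get((gender, qualified), 0.0) > 0.5


model = train(history)
print(predict(model, "man", True), predict(model, "woman", True))  # True False
```

Nothing in the code "intends" to discriminate; the unequal outcome comes entirely from the pattern already present in the training records.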

How can we put an end to this?

The many sources of gender bias, as well as the peculiarities of each type of algorithm and dataset, mean that eliminating this bias is a very tough, though not impossible, challenge.

"Designers and everyone else involved in the design process need to be aware that biases may be associated with an algorithm's logic. What's more, they need to understand the measures available for minimizing potential biases as far as possible, and implement them so that those biases don't occur, because if they are aware of the types of discrimination occurring in society, they will be able to identify when the solutions they develop reproduce them," suggested Castañeda.
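One simple way to spot when a system reproduces discrimination is to audit its outputs group by group. The Python sketch below illustrates that idea and is not a procedure described in the paper. It computes each group's selection rate from a made-up decision log and the ratio of the lowest rate to the highest. It flags ratios below 0.8, a threshold borrowed from the "four-fifths" rule of thumb used in US employment guidance. Treating that as the right threshold here is an assumption.

```python
from collections import Counter


def selection_rates(decisions):
    """Map each group to the share of its members who were selected.

    `decisions` is an iterable of (group, selected) pairs.
    """
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    return min(rates.values()) / max(rates.values())


# Hypothetical audit log of a system's decisions (invented numbers).
log = [("men", True)] * 6 + [("men", False)] * 4 + [("women", True)] * 3 + [("women", False)] * 7

rates = selection_rates(log)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed audit threshold from the four-fifths rule of thumb
print(rates, round(ratio, 2), flagged)
```

A check like this only detects a disparity. Deciding whether it is unjustified, and how to correct it, still requires the kind of social and technical context the researchers call for.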

This work is innovative because it was carried out by specialists in different areas, including a sociologist, an anthropologist and experts in gender and statistics. "The team's members provided a perspective that went beyond the autonomous mathematics associated with algorithms, thereby helping us to view them as complex socio-technical systems," said the study's principal investigator.

"If you compare this work with others, I think it is one of only a few that present the issue of biases in algorithms from a neutral standpoint, highlighting both social and technical aspects to identify why an algorithm might make a biased decision," she concluded.

More information: Juliana Castaneda et al, Dealing with Gender Bias Issues in Data-Algorithmic Processes: A Social-Statistical Perspective, Algorithms (2022). DOI: 10.3390/a15090303

Provided by Universitat Oberta de Catalunya (UOC)

Citation: How to put an end to gender biases in internet algorithms (2022, November 23) retrieved 23 November 2022 from https://techxplore.com/news/2022-11-gender-biases-internet-algorithms.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.
