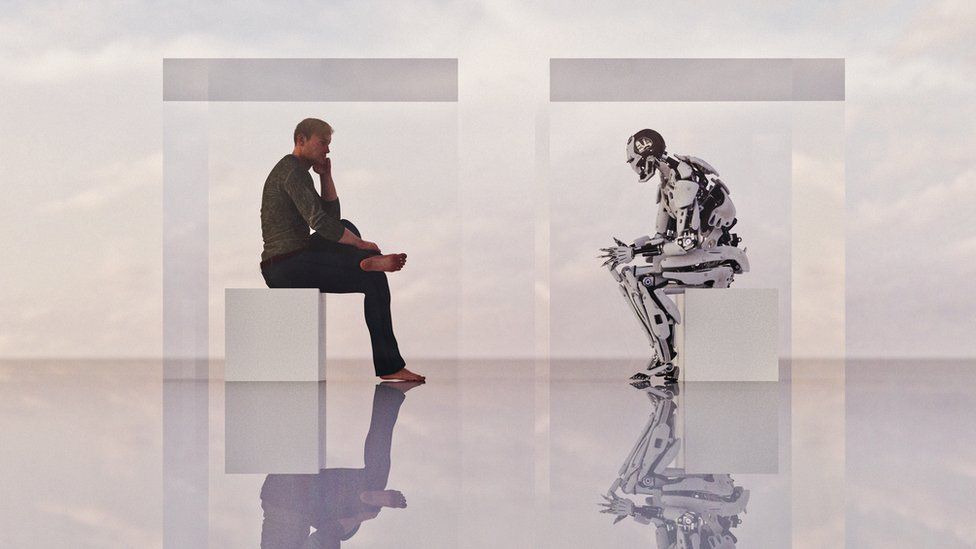

Image source, Getty Images


By Chris Vallance

Technology reporter

A new chatbot has passed one million users in less than a week, the project behind it says.

ChatGPT was publicly released on Wednesday by OpenAI, an artificial intelligence research firm whose founders included Elon Musk.

But the company warns it can produce problematic answers and exhibit biased behaviour.

OpenAI says it is "eager to collect user feedback to aid our ongoing work to improve this system".

ChatGPT is the latest in a series of AIs which the firm refers to as GPTs, an acronym which stands for Generative Pre-Trained Transformer.

To develop the system, an early version was fine-tuned through conversations with human trainers.

The system also learned from access to Twitter data, according to a tweet from Elon Musk, who is no longer part of OpenAI's board. The Twitter boss wrote that he had paused access "for now".

The results have impressed many who have tried out the chatbot. OpenAI chief executive Sam Altman revealed the level of interest in the artificial conversationalist in a tweet.

The project says the chat format allows the AI to answer "follow-up questions, admit its mistakes, challenge incorrect premises and reject inappropriate requests".

A journalist for technology news site Mashable who tried out ChatGPT reported that it is hard to provoke the model into saying offensive things.

Image source, Getty Images

Mike Pearl wrote that in his own tests "its taboo avoidance system is pretty comprehensive".

However, OpenAI warns that "ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers".

Training the model to be more cautious, says the firm, causes it to decline to answer questions that it can answer correctly.

Briefly questioned by the BBC for this article, ChatGPT revealed itself to be a cautious interviewee capable of expressing itself clearly and accurately in English.

Did it think AI would take the jobs of human writers? No - it argued that "AI systems like myself can help writers by providing suggestions and ideas, but ultimately it is up to the human writer to create the final product".

Asked what the social impact of AI systems such as itself would be, it said this was "hard to predict".

Had it been trained on Twitter data? It said it did not know.

Only when the BBC asked a question about HAL, the malevolent fictional AI from the film 2001, did it seem troubled.

Image source, OpenAI/BBC

Image caption, A question ChatGPT declined to answer - or perhaps just a glitch

That was most likely just a random error, though - unsurprising perhaps, given the volume of interest.

Its master's voice

Other firms which opened conversational AIs to general use found they could be persuaded to say offensive or disparaging things.

Many are trained on vast databases of text scraped from the internet, and consequently they learn from the worst as well as the best of human expression.

Meta's BlenderBot3 was highly critical of Mark Zuckerberg in a conversation with a BBC journalist.

In 2016, Microsoft apologised after an experimental AI Twitter bot called "Tay" said offensive things on the platform.

And others have found that success in creating a convincing computer conversationalist sometimes brings unexpected problems.

Google's Lamda was so plausible that a now-former employee concluded it was sentient, and deserving of the rights due to a thinking, feeling being, including the right not to be used in experiments against its will.

Jobs threat

ChatGPT's ability to answer questions caused some users to wonder if it might replace Google.

Others asked if journalists' jobs were at risk. Emily Bell of the Tow Center for Digital Journalism worried that readers might be deluged with "bilge".

ChatGPT proves my biggest fears about AI and journalism - not that honest journalists will be replaced in their work - but that these capabilities will be used by bad actors to autogenerate the most astounding amount of misleading bilge, smothering reality

Emily Bell (@emilybell), December 4, 2022

One question-and-answer site has already had to curb a flood of AI-generated answers.

Others invited ChatGPT to speculate on AI's impact on the media.

General purpose AI systems, like ChatGPT and others, raise a number of ethical and societal risks, according to Carly Kind of the Ada Lovelace Institute.

Among the potential problems of concern to Ms Kind are that AI might perpetuate disinformation, or "disrupt existing institutions and services - ChatGPT might be able to write a passable job application, school essay or grant application, for example".

There are also, she said, questions about copyright infringement "and there are also privacy concerns, given that these systems often incorporate data that is unethically collected from internet users".

However, she said they may also deliver "interesting and as-yet-unknown societal benefits".

ChatGPT learns from human interactions, and OpenAI chief executive Sam Altman tweeted that those working in the field also have much to learn.

AI has a "long way to go, and big ideas yet to discover. We will stumble along the way, and learn a lot from contact with reality.

"It will sometimes be messy. We will sometimes make really bad decisions, we will sometimes have moments of transcendent progress and value," he wrote.
